Drill is an Apache open-source SQL query engine for Big Data

exploration. Drill is designed to support high-performance analysis on

the semi-structured. It uses ecosystem of ANSI SQL, the industry-standard query

language. Drill provides plug-and-play integration with existing Apache Hive

and Apache HBase deployments.

Why

Drill

Top

Reasons to Use Drill:

- Get started in

minutes:It takes just a few minutes to get started with Drill.

- Schema-free JSON

model:No need to define and maintain schemas or transform data (ETL).

Drill automatically understands the structure of the data.

- Query complex,

semi-structured data in-situ:Using Drill's schema-free JSON model, you can

query complex, semi-structured data in situ. No need to flatten or

transform the data prior to or during query execution.

- Leverage

standard BI tools:Drill works with standard BI tools. You can use your

existing tools, such as Tableau,

- Access multiple

data sources:You can connect Drill out-of-the-box to file systems (local

or distributed, such as S3 and HDFS), HBase and Hive

- High

performance:Drill is designed from the ground up for high throughput and

low latency. It doesn't use a general purpose execution engine like

MapReduce, Tez or Spark. As a result, Drill is flexible (schema-free JSON

model) and performant.

High-Level

Architecture

At the core of Apache Drill is the "Drillbit" service,

which is responsible for accepting requests from the client, processing the

queries, and returning results to the client.

A Drillbit service can be installed and run on all of the required

nodes in a Hadoop cluster to form a distributed cluster environment. When a

Drillbit runs on each data node in the cluster, Drill can maximize data

locality during query execution without moving data over the network or between

nodes. Drill uses ZooKeeper to maintain cluster membership and health-check

information.

Though Drill works in a Hadoop cluster environment, Drill is not

tied to Hadoop and can run in any distributed cluster environment. The only

pre-requisite for Drill is ZooKeeper.

When you submit a Drill query, a client or an application sends

the query in the form of an SQL statement to a Drillbit in the Drill cluster. A

Drillbit is the process running on each active Drill node that coordinates,

plans, and executes queries, as well as distributes query work across the

cluster to maximize data locality.

The following image represents the communication between clients,

applications, and Drillbits:

The Drillbit that receives the query from a client or application

becomes the Foreman for the query and drives the entire query. A parser in the

Foreman parses the SQL, applying custom rules to convert specific SQL operators

into a specific logical operator syntax that Drill understands. This collection

of logical operators forms a logical plan. The logical plan describes the work

required to generate the query results and defines which data sources and

operations to apply.

The Foreman sends the logical plan into a cost-based optimizer to

optimize the order of SQL operators in a statement and read the logical plan.

The optimizer applies various types of rules to rearrange operators and

functions into an optimal plan. The optimizer converts the logical plan into a

physical plan that describes how to execute the query.

A parallelizer in the Foreman transforms the physical plan into

multiple phases, called major and minor fragments. These fragments create a

multi-level execution tree that rewrites the query and executes it in parallel

against the configured data sources, sending the results back to the client or

application.

A major fragment is a concept that represents a phase of the query

execution. A phase can consist of one or multiple operations that Drill must

perform to execute the query. Drill assigns each major fragment a

MajorFragmentID.

For example, to perform a hash aggregation of two files, Drill may

create a plan with two major phases (major fragments) where the first phase is

dedicated to scanning the two files and the second phase is dedicated to the

aggregation of the data.

Drill uses an exchange operator to separate major fragments.

Major fragments do not actually perform any query tasks. Each

major fragment is divided into one or multiple minor fragments (discussed in

the next section) that actually execute the operations required to complete the

query and return results back to the client.

Each major fragment is parallelized into minor fragments. A minor

fragment is a logical unit of work that runs inside a thread. A logical unit of

work in Drill is also referred to as a slice. The execution plan that Drill

creates is composed of minor fragments. Drill assigns each minor fragment a

MinorFragmentID.

The parallelizer in the Foreman creates one or more minor

fragments from a major fragment at execution time, by breaking a major fragment

into as many minor fragments as it can usefully run at the same time on the

cluster.

Drill executes each minor fragment in its own thread as quickly as

possible based on its upstream data requirements. Drill schedules the minor

fragments on nodes with data locality. Otherwise, Drill schedules them in a

round-robin fashion on the existing, available Drillbits.

Minor fragments can run as root, intermediate, or leaf fragments.

An execution tree contains only one root fragment. Data flows downstream from

the leaf fragments to the root fragment.

The root fragment runs in the Foreman and receives incoming

queries, reads metadata from tables, rewrites the queries and routes them to

the next level in the serving tree. The other fragments become intermediate or

leaf fragments.

Intermediate fragments start work when data is available or fed to

them from other fragments. They perform operations on the data and then send

the data downstream. They also pass the aggregated results to the root

fragment, which performs further aggregation and provides the query results to

the client or application.

The leaf fragments scan tables in parallel and communicate with

the storage layer or access data on local disk. The leaf fragments pass partial

results to the intermediate fragments, which perform parallel operations on

intermediate results.

Drill only plans queries that have concurrent running fragments.

For example, if 20 available slices exist in the cluster, Drill plans a query

that runs no more than 20 minor fragments in a particular major fragment. Drill

is optimistic and assumes that it can complete all of the work in parallel. All

minor fragments for a particular major fragment start at the same time based on

their upstream data dependency.

The following image represents components within each Drillbit:

The following list describes the key components of a Drillbit:

- RPC endpoint:

Drill exposes a low overhead protobuf-based RPC protocol to communicate

with the clients.

- SQL parser:

Drill uses Calcite, the open source SQL parser

framework, to parse incoming queries. The output of the parser component

is a language agnostic, computer-friendly logical plan that represents the

query.

- Storage plugin interface: Drill serves as a query layer on

top of several data sources.In the context of Hadoop, Drill provides

storage plugins for distributed files and HBase. Drill also integrates

with Hive using a storage plugin.

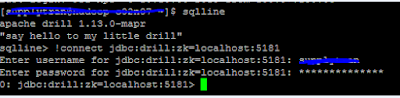

Drill

Session:

You can use a jdbc connection string to connect to SQLLine when

Drill is installed in embedded mode or distributed mode, as shown in the

following examples:

- Embedded

mode:./sqlline -u jdbc:drill:drillbit=local

- Distributed

mode:./sqlline –u jdbc:drill:zk=cento23,centos24,centos26:2181

Workspaces:

You can create your own workspace in drill. Workspace is nothing but the directory in which you can create your views / tables. You can define one or more workspaces in a storage plugin configuration.

You can create your own workspace in drill. Workspace is nothing but the directory in which you can create your views / tables. You can define one or more workspaces in a storage plugin configuration.

Attribute-workspaces". . . "location

Example-"location": "/Users/johndoe/mydata"

VIEW:

The CREATE VIEW command creates a virtual structure for the result

set of a stored query. A view can combine data from multiple underlying data

sources and provide the illusion that all of the data is from one source. You

can use views to protect sensitive data, for data aggregation, and to hide data

complexity from users. You can create Drill views from files in your local and

distributed file systems, such as Hive and HBase tables, as well as from

existing views or any other available storage plugin data sources.

The CREATE VIEW command supports the following syntax:

CREATE [OR REPLACE] VIEW [workspace.]view_name [ (column_name [,

...]) ] AS query;

Parameters

- workspace:The

location where you want the view to exist. By default, the view is created

in the current workspace.

- view_name:The

name that you give the view. The view must have a unique name. It cannot

have the same name as any other view or table in the workspace.

- column_name:Optional

list of column names in the view. If you do not supply column names, they

are derived from the query.

- query:A SELECT

statement that defines the columns and rows in the view.

The following example shows a writable workspace as defined within

the storage plugin in the /DrillView directory of the file system:

"workspaces": {

"supply_view": {

"location":

"/a/b/DrillView",

"writable": true,

"defaultInputFormat": null

}

}

Drill stores the view definition in JSON format with the name that

you specify when you run the CREATE VIEW command, suffixed by

.view.drill. For example, if you create a view named myview, Drill

stores the view in the designated workspace as myview.view.drill.

For example, i have created one view dummy in my workspace

dfs.supply_view over a hive table employee.

- Select the

workspace by command use dfs.supply_view;

- Create view

dummy as select * from hive.`default`.employee.

Note 1 :You have to use escape character as default is reserved word

in drill.

Here you can check your view file in linux file system by going to

the workspace directory which you have provided in conf file.

Note 2:For hbase and binary tables you have to use function

CONVERT_FROM.

For example

create view dfs.supply_view.mydrill_bang as SELECT

CONVERT_FROM(row_key, 'UTF8') AS name,

CONVERT_FROM(customer.addr.city, 'UTF8') AS city,

CONVERT_FROM(customer.addr.state, 'UTF8') AS state,

CONVERT_FROM(customer.`order`.numb, 'UTF8') AS numb,

CONVERT_FROM(customer.`order`.`date`, 'UTF8') AS `date`

FROM customer

WHERE CONVERT_FROM(customer.addr.city, 'UTF8')='bengaluru';

WEB

DRILL

You can run query in Drill web UI as well.The Drill Web UI

is one of several client interfaces that you can use to access Drill

Accessing

the Web UI

To access the Drill Web UI,

enter the URL appropriate for your Drill configuration. The following list describes

the URLs for various Drill configurations:

http://<IP address or

host name>:8047

Use this URL when HTTPS support is disabled (the default).

https://<IP address or host name>:8047

Use this URL when HTTPS support is enabled.

http://localhost:8047

Use this URL when running Drill in embedded mode (./drill-embedded).

Use this URL when HTTPS support is disabled (the default).

https://<IP address or host name>:8047

Use this URL when HTTPS support is enabled.

http://localhost:8047

Use this URL when running Drill in embedded mode (./drill-embedded).

On accessing drill web UI .It looks like this.

You can also check the performance of your query by going to the

profiles.A profile is a summary of metrics collected for each query that Drill

executes. Query profiles provide information that you can use to monitor and

analyze query performance. When Drill executes a query, Drill writes the

profile of each query to disk, which is either the local filesystem or a

distributed file system, such as HDFS.

You can view query profiles in the Profiles tab of the Drill Web

UI. When you select the Profiles tab, you see a list of the last 100 queries

than ran or are currently running in the cluster.

You must click on a query to see its profile.

The profile hold all the information for the query like physical

plan,visualized plan.It conatins all the info like elapsed time between hive

,total fragments,total cost.

By reading all this you can optimise your query and re-write it in

a optimised way.

Thats all.

Thanks for reading!

Bye!